The World of AI at Work in 2026

Bottom-Up AI, Browser-First Work, and the New Gap Between Productivity and Control

Executive Summary

AI at work is now the default.

Employees across industries are already using AI tools every day, often outside formal programs or IT visibility. Meanwhile, leaders face growing pressure to “scale with AI,” even as governance lags. Most organizations simply do not yet have the proper policies, monitoring, or architectures in place to make secure AI adoption possible.

Browsers are the new AI workplace.

Most work now happens in web apps and SaaS in a Chromium-based browser, often with built-in AI features. That puts identity, data, and AI flows at the “last mile,” where humans interact on managed and unmanaged devices. Now, in addition to securing back-end systems, securing AI at work means securing what happens in the browser.

Attackers are using AI as a force multiplier.

While enterprises debate pilots and policies, attackers use AI to write better phishing emails, generate deepfakes, automate reconnaissance, and exploit gaps at the edge. With traditional rule- and signature-based defenses struggling to keep pace, forward-thinking security leaders are sharing more AI risk ownership with the rest of the business, modernizing their stack around the browser, and using AI to accelerate success.

AI at Work in 2025

AI should amplify our intelligence, not outsource our cognition. The real test for leaders is whether they can help people learn with AI, not just control it.

Bottom-up AI adoption outpaced top-down governance.

Over the last two years, AI graduated from the experimentation phase to something most employees use every day. For many organizations, adoption has been bottom-up, with individuals testing free tools, pasting in work content, and wiring AI into their own workflows long before policies or controls were ever put in place.

Such an approach delivered immediate productivity gains—but it also created a governance vacuum. In fact, over half of U.S. office workers say they are willing to violate company AI policy to make their jobs easier, and roughly a third say they would quit if their employer banned AI entirely.

Shadow AI became the new shadow IT.

Shadow AI is perhaps the most visible symptom of bottom-up adoption. Across organizations, employees feed contracts, source code, customer data, or strategy decks into public chatbots and plug-ins, often hosted in unknown jurisdictions and almost never under contractual control.

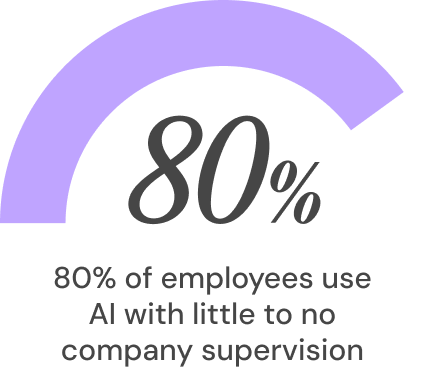

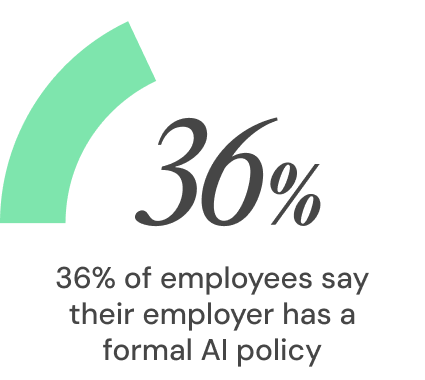

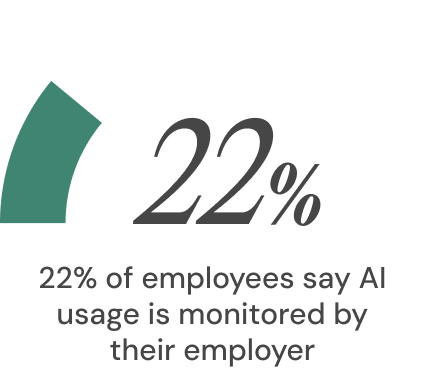

Last year, 80% of staff reported AI use with little to no company supervision. Meanwhile, only 36% say their employer has a formal AI policy and just 22% say their usage is monitored. Yet more than 60% rely on free, public AI tools to do their jobs, and more than a quarter say they would use AI even if it were formally banned.

By 2027, Gartner predicts that 75% of employees will acquire, modify, or create technology outside IT’s visibility, up from 41% in 2022. Shadow AI is even harder to contain: because these tools live “one tab away” and require no installation or ticket, they often spread faster and touch more sensitive content than older shadow IT ever did.

Shadow AI is fundamentally just people sending organizational data to third parties without agreements or controls.

AI, browsers, data, and legacy infrastructure collided.

Today, most interactions with AI occur via browser tabs: copilots built into SaaS, public chatbots, AI plug-ins, and internal assistants delivered over the web. With Chromium-based browsers accounting for more than 75% of global usage, the browser concentrates identity, data, and workflow risks in a layer where legacy endpoint DLP and network controls have limited visibility, especially on unmanaged or third-party devices.

Now, over half of all company data is accessed on a consumer browser. At the same time, 68% of companies report experiencing data loss from attacks originating at endpoints, a pattern that often begins in the browser as users interact with SaaS, cloud apps, and AI tools. In addition, one quarter of all malware collected in 2024 was designed specifically to steal user credentials.

Meanwhile, legacy infrastructure is struggling to keep up with these new expectations. In public-sector and healthcare environments, for instance, critical applications still live inside fragile VDI setups built to contain old software rather than enable AI-assisted work. Now, that gap between legacy experience and AI-enabled expectations is growing too big to ignore.

Tech debt isn’t hidden—it’s overwhelming. VDI became the default answer for ‘old app, new environment.’ But it’s a terrible UX and an expensive crutch. Enterprise browsers let you tear down that crutch without breaking the leg.

AI risks shifted from theoretical to practical.

In 2025, real-world issues like prompt injection, data leakage into public models, AI-assisted fraud, and regulatory exposure around data residency and IP became even more pressing. Until then, most organizations had not realized they lacked the guardrails to keep up with how AI was actually being used.

If you’re not breaking and inspecting TLS streams, you’re already blind to what employees are sending to AI services.

Data leakage into uncontrolled models.

AI tools that retain or train on user inputs can absorb sensitive information, surface it to other users, or carry it into future models. Already, one in three breaches involves shadow data, and 68% of breaches involve a non-malicious human element such as phishing or accidental mishandling.

AI-assisted phishing and fraud.

Attackers can use AI to generate highly personalized, fluent, and context-aware messages at scale, adjusting in real time based on which messages get clicks. In some experiments, AI-generated phishing emails were even more effective than human-written ones and much faster to produce.

AI-generated phishing emails are already more effective than human-generated ones and much faster to produce. You can’t just train users to ‘be more careful’ and call it done.

For organizations that consume LLMs through an API, the big risk is prompt injection. Everything else I’d qualify as basic hygiene and traditional attacks.

Prompt injection and agent subversion.

With 20–25% of generative-AI systems susceptible to some form of prompt injection, attackers can often bypass intended constraints and co-opt unguarded agents and copilots.

Deepfakes and multi-channel social engineering.

Deepfake audio and video, trained on social feeds, public appearances, and leaked calls, make it easy to mimic executives, family members, or colleagues. That collapses long-standing heuristics like “I recognize their voice” or “I saw them on camera” and forces people to rely on methods like out-of-band verification for anything involving money or credentials.

Attackers are also experimenting with using AI for reconnaissance and exploit development, including combining dark web data, open-source intelligence, and social media to generate bespoke attacks against high-value targets. Now, the same AI that makes workers more productive is making adversaries faster and more adaptive.

It’s not fair to put the responsibility on people to pick up on deepfakes—anyone can be fooled. We need AI tools that counter what AI-driven attackers can do.

AI at Work in 2026

Enterprise browsers as the AI workplace.

As the primary interface where people access data, trigger workflows, and engage with AI, browsers are becoming the natural control plane for AI at work. Unlike consumer browsers, enterprise browsers embed controls such as DLP, isolation, identity protections, and policy enforcement directly into the experience without modifying SaaS or AI tools themselves. That way, organizations can monitor prompts, block sensitive fields from flowing into public AI, and enforce approvals for risky sites or extensions, all while keeping the UX familiar.

With even more applications and AI tools moving to the browser in 2026, many organizations are already retiring or shrinking expensive legacy stacks like VDI, VPN, and secure web gateways. That makes it easier to deliver governed AI experiences to any device, anywhere, without shipping hardware or building complex tunnels.

In fact, according to Gartner, 1 in 4 organizations will augment secure remote access and endpoint security tools by deploying at least one secure enterprise browser technology by 2028. Gartner also predicts that by 2030, enterprise browsers will be the core platform for delivering workforce productivity and security software on managed and unmanaged devices.

Some organizations have even achieved a 344% return on investment, saved 12 minutes per employee per day, and reduced security risk exposure by up to 90% with enterprise browsers like Island.

We’ve gone past the days where you trust your computers and let them go wherever they want on the internet. Move to enterprise browsers you control.

Human work, AI, and culture.

In 2026, AI will be a daily co-worker for many employees—drafting emails, summarizing meetings, suggesting code, or answering domain questions. But that shift will also continue to change how people think about trust, autonomy, and rules at work.

In fact, 45% of employees now trust algorithms more than their coworkers, and over one-third would prefer to report to an AI manager. Meanwhile, over half of U.S. office workers—and more than two-thirds of C-suite executives—are willing to violate their own company’s AI policies to make their jobs easier. With or without formal approval, it seems many of the people closest to the work are making de facto decisions about AI risk and governance.

Beyond the technical realm, leaders have to meet this reality on human terms, too. People need to understand why certain AI uses are dangerous and where the guardrails are—not just what’s forbidden. That means translating prompt injection, data residency, and model drift into digestible narratives about reputation, jobs, and trust. It also means designing with learning in mind: letting people experiment safely, in monitored environments, with AI tools they can trust and are allowed to use.

Many security teams are shifting from blockers to AI enablers in response. Historically, security was perceived as the function that said “no” or slowed things down. In the AI era, teams that simply say “no” will be bypassed via shadow AI. The ones that thrive are building principles, processes, and platforms that let teams adopt AI safely, with visibility and control—and they’re doing it fast enough to meet business demand.

In tomorrow’s world, we need to separate the context we feed the model from the workflow that acts on its output and make those flows deterministic.
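One way to read this quote: the model only proposes an action as structured text, and deterministic code decides whether anything runs. The Python sketch below is illustrative only; the JSON output format, the `ALLOWED_ACTIONS` allowlist, and the action names are hypothetical assumptions, and a production gate would also check the caller's permissions.

```python
import json

# Hypothetical allowlist: action name -> required argument names and their types.
ALLOWED_ACTIONS = {
    "create_ticket": {"title": str, "priority": str},
    "tag_record": {"record_id": str, "tag": str},
}
ALLOWED_PRIORITIES = {"low", "medium", "high"}


class RejectedOutput(ValueError):
    """Raised when model output does not match an allowlisted action exactly."""


def parse_proposed_action(model_output: str) -> dict:
    """Deterministically validate a model's proposed action before anything executes.

    The model's text is treated as untrusted data: it must be a JSON object naming
    an allowlisted action with exactly the expected, correctly typed arguments.
    """
    try:
        proposal = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise RejectedOutput(f"not valid JSON: {exc}") from exc
    if not isinstance(proposal, dict):
        raise RejectedOutput("expected a JSON object")

    action = proposal.get("action")
    args = proposal.get("args")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise RejectedOutput(f"action not allowlisted: {action!r}")

    schema = ALLOWED_ACTIONS[action]
    if not isinstance(args, dict) or set(args) != set(schema):
        raise RejectedOutput("arguments do not match the expected schema")
    for name, expected_type in schema.items():
        if not isinstance(args[name], expected_type):
            raise RejectedOutput(f"argument {name!r} has the wrong type")
    if action == "create_ticket" and args["priority"] not in ALLOWED_PRIORITIES:
        raise RejectedOutput("priority outside the allowed set")

    return {"action": action, "args": args}


# A well-formed proposal passes; an injected request for an unlisted action does not.
ok = parse_proposed_action(
    '{"action": "create_ticket", "args": {"title": "Reset MFA", "priority": "high"}}'
)
try:
    parse_proposed_action('{"action": "delete_all_records", "args": {}}')
except RejectedOutput as err:
    print("rejected:", err)
```

Because the gate never interprets free text, a prompt-injected instruction can at most produce a proposal that fails validation; it cannot widen what the workflow is able to do.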

The World of AI at Work: 2026 Playbook

1. Start with the browser.

Begin by discovering how AI is already being used inside the browser:

- Which AI sites, extensions, and in-app copilots are people using?

- From which devices (managed, unmanaged, third-party)?

- Against which data domains (customer, finance, HR, code, IP)?

Use enterprise browsers or browser-based controls to instrument this usage. The goal is not to eliminate unsanctioned tools overnight, but to replace invisible, high-risk usage with governed, visible usage over time.

Determine the ratio of AI usage through approved, monitored channels versus everything else, and track that trend by department and role as you roll out alternatives.
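The ratio of approved to total AI usage in this step is simple arithmetic once browser telemetry exists. A minimal Python sketch, assuming each AI interaction arrives as an event tagged with department, role, and whether it used a sanctioned channel; the `AIUsageEvent` fields and the tool names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsageEvent:
    """One observed AI interaction, e.g. from browser telemetry (fields are illustrative)."""
    department: str
    role: str
    tool: str          # AI site, extension, or in-app copilot
    sanctioned: bool   # True if it went through an approved, monitored channel


def sanctioned_share(events, key=lambda e: e.department):
    """Return {group: fraction of AI events that used sanctioned channels}."""
    totals = defaultdict(int)
    sanctioned = defaultdict(int)
    for event in events:
        group = key(event)
        totals[group] += 1
        if event.sanctioned:
            sanctioned[group] += 1
    return {group: sanctioned[group] / totals[group] for group in totals}


events = [
    AIUsageEvent("finance", "analyst", "approved-copilot", True),
    AIUsageEvent("finance", "analyst", "public-chatbot", False),
    AIUsageEvent("finance", "controller", "approved-copilot", True),
    AIUsageEvent("engineering", "developer", "browser-extension", False),
]
by_department = sanctioned_share(events)
by_role = sanctioned_share(events, key=lambda e: e.role)
```

Computing these shares once per reporting period and comparing them over time gives the trend line; a rising share in a department suggests the sanctioned alternative is actually displacing shadow tools there.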

2. Treat browsers like an AI firewall.

Assume that most meaningful AI-related data flows and identity events start or end in the browser.

Design policies that are specific (e.g., “no customer PII to public AI”) and enforceable in the browser, rather than generic “be careful” guidance. Use browser telemetry to understand where policies are too lax or too strict, then iterate.

- Inspect prompts and responses for sensitive content before they leave your environment, blocking or masking where necessary.

- Control credential use and session handling in the browser to prevent phishing, keylogging, and infostealer-driven takeover across apps.

- Apply isolation or step-up verification to risky sites and tools, rather than hoping users will notice subtle differences in URLs or trust banners.
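A policy like “no customer PII to public AI” becomes enforceable once it is expressed as a check on outgoing prompts. The Python sketch below masks matches before a prompt bound for a public AI destination leaves the browser. The two regular expressions are deliberately simple illustrations, and the destination label `public_ai` is a hypothetical category a policy engine might assign; real DLP relies on much richer classifiers.

```python
import re

# Illustrative detectors only; production DLP relies on richer classifiers and context.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical destination category assigned by the browser policy engine.
PUBLIC_AI = "public_ai"


def apply_prompt_policy(prompt: str, destination: str) -> tuple[str, str, list[str]]:
    """Enforce "no customer PII to public AI" by masking matches before the prompt leaves.

    Returns (decision, outgoing_prompt, labels_found).
    """
    if destination != PUBLIC_AI:
        return "allow", prompt, []
    found = []
    outgoing = prompt
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(outgoing):
            found.append(label)
            outgoing = pattern.sub(f"[{label}]", outgoing)
    return ("mask" if found else "allow"), outgoing, found


decision, outgoing, found = apply_prompt_policy(
    "Draft a reply to jane.doe@example.com about SSN 123-45-6789", PUBLIC_AI
)
```

Masking rather than blocking keeps the user productive, while the returned labels feed the telemetry used to tune whether a policy is too lax or too strict.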


3. Assign real risk ownership.

AI governance is not just a list of forbidden tools but a repeatable pattern.

Make governance visible by publishing your AI principles internally and, where appropriate, externally. Consider establishing an AI committee with representation from security, legal, data, HR, and key business lines. Use this group to prioritize AI investments and decommission high-risk patterns.

Principles.

Agree on what you will do with AI (e.g., assist humans, not fully automate high-stakes decisions) and what you won’t (e.g., train public models on proprietary data).

Process.

Define how new AI tools, agents, and use cases are evaluated, approved, and monitored—including legal review, data classification, threat modeling, and pilot phases.

Ownership.

Explicitly assign risk owners for AI systems and workflows (product, data, operations, or business leaders), with security as co-owner and advisor rather than the sole scapegoat.


4. Tie AI risks and controls to existing work.

Don’t treat AI risk as a separate silo. Instead, fold it into the frameworks and operations you already use.

Establish a rhythm for purple-teaming and tabletop exercises that explicitly cover AI scenarios—both attacks and failures of internal agents—and use the results to refine controls and training.

- Map AI-specific threats and controls into MITRE ATT&CK, MITRE ATLAS, and your internal risk registers.

- Update detection content and playbooks to account for AI-assisted attacks (e.g., prompt injection, anomalous agent behavior, deepfake-assisted social engineering).

- Use AI assistants in the SOC to summarize alerts, enrich cases, suggest queries, and automate low-risk actions, but keep humans in the approval loop.

- Integrate AI-related checks into CI/CD, such as scanning AI-generated code for vulnerabilities, enforcing infrastructure-as-code policies, and implementing guardrails for AI usage in pipelines.
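As a rough illustration of a CI/CD check on AI-generated code, the Python sketch below uses the standard-library `ast` module to flag a few high-risk call patterns (`eval`, `exec`, and any call passing `shell=True`). The rule set and the `gate` helper are hypothetical and far narrower than a real static-analysis tool; the point is the shape of the check, not its coverage.

```python
import ast

# Illustrative rules; a real pipeline would run a full SAST tool instead of this list.
RISKY_BUILTINS = {"eval", "exec"}


def find_risky_calls(source: str, filename: str = "<generated>") -> list[str]:
    """Flag a few high-risk call patterns in (possibly AI-generated) Python source."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(user_input) or exec(code).
        if isinstance(node.func, ast.Name) and node.func.id in RISKY_BUILTINS:
            findings.append(f"{filename}:{node.lineno}: call to {node.func.id}()")
        # Any call passing shell=True, e.g. subprocess.run(cmd, shell=True).
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                findings.append(f"{filename}:{node.lineno}: shell=True")
    return findings


def gate(files: dict[str, str]) -> int:
    """Return a CI exit code: 0 if no findings, 1 otherwise (findings are printed)."""
    findings = [f for name, src in files.items() for f in find_risky_calls(src, name)]
    for finding in findings:
        print(finding)
    return 1 if findings else 0


sample = "import subprocess\nx = eval(user_input)\nsubprocess.run(cmd, shell=True)\n"
```

In a pipeline, the job would pass in the files touched by a change and exit with `gate(...)`'s return value, so any finding blocks the merge until a human reviews it.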


5. Design AI around vertical workflows and human roles.

Avoid scattering AI pilots everywhere. Instead, focus on high-value workflows per business unit. Then, use metrics to decide where to expand, refine, or retire AI use cases.

For each workflow, define where AI sits (in which app or browser context), what data it can see and cannot see, what it can do (read, write, act) and what requires human approval, and how success will be measured (e.g., time-to-resolution, error rates, satisfaction, risk reduction).
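Writing these answers down as data makes them enforceable rather than aspirational. A minimal Python sketch, in which the `WorkflowSpec` fields, the action names, and the finance example are all hypothetical and simply mirror the questions in the paragraph above:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkflowSpec:
    """Hypothetical per-workflow AI contract: where it sits, what it sees and may do."""
    name: str
    surface: str                        # where the AI sits (app or browser context)
    visible_data: frozenset[str]        # data domains the AI may see
    allowed_actions: frozenset[str]     # subset of {"read", "write", "act"}
    approval_required: frozenset[str]   # actions that must go to a human first
    success_metrics: tuple[str, ...]    # how the workflow will be judged


def decide(spec: WorkflowSpec, action: str, data_domain: str) -> str:
    """Return "allow", "needs_human_approval", or "deny" for a proposed AI step."""
    if data_domain not in spec.visible_data or action not in spec.allowed_actions:
        return "deny"
    if action in spec.approval_required:
        return "needs_human_approval"
    return "allow"


reconciliation = WorkflowSpec(
    name="finance-reconciliation",
    surface="erp-web-console",
    visible_data=frozenset({"finance"}),
    allowed_actions=frozenset({"read", "write", "act"}),
    approval_required=frozenset({"act"}),
    success_metrics=("time-to-close", "error rate"),
)
```

In this example the AI may read and draft within finance data, but any step that acts (for instance, posting an entry) is routed to a person, and requests touching other domains such as HR are denied outright.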

5.1 In customer service.

Copilots that sit alongside agents in the browser, pulling context safely from approved systems.

5.2 In finance.

AI that assists with reconciliations, forecasts, or ALM scenarios—but does not fully automate approvals without human review.

5.3 In healthcare and digital health.

AI that drafts notes, flags anomalies, or surfaces similar cases for clinicians, not tools that act autonomously on patient data.

5.4 In operations and security.

AI that triages events, drafts responses, and proposes actions—then routes to humans for decision and execution.


6. Use AI to defend against AI.

As attackers continue to use AI, defenders will need to do the same.

- Deploy AI-driven email and web protections that detect subtle patterns in language, layout, and behavior that humans can’t reliably see.

- Use browser-layer automation to enforce policy in real time: blocking sensitive uploads, neutralizing credential prompts, and enforcing session hygiene.

- Apply anomaly detection to agent behavior: which actions are unusual for a given agent or user, given time, context, and history?

- Feed SOC and threat-hunting teams AI-assisted analysis across logs, cloud telemetry, and browser events so they can focus on higher-order investigation.

Treat these defensive AI systems as products: instrument them, monitor false positives and false negatives, and pair them with human feedback loops so they improve over time.
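Anomaly detection on agent behavior can start far simpler than a learned model. The Python sketch below is a hypothetical frequency baseline, not a specific product's method: for each agent it counts (action, context) pairs, and flags a new pair as unusual when it makes up less than a threshold share of that agent's history. The threshold values and the context labels (such as `"off_hours"`) are assumptions.

```python
from collections import Counter, defaultdict


class AgentBaseline:
    """Frequency baseline of (action, context) pairs per agent; flags rare pairs.

    A simple stand-in for anomaly detection: an action is "unusual" for an agent if it
    makes up less than `min_share` of that agent's observed history.
    """

    def __init__(self, min_share: float = 0.05, min_history: int = 20):
        self.min_share = min_share
        self.min_history = min_history
        self.history = defaultdict(Counter)  # agent -> Counter of (action, context)

    def observe(self, agent: str, action: str, context: str) -> None:
        self.history[agent][(action, context)] += 1

    def is_unusual(self, agent: str, action: str, context: str) -> bool:
        seen = self.history.get(agent, Counter())
        total = sum(seen.values())
        if total < self.min_history:
            # Too little history to judge; a stricter policy might send these to review.
            return False
        return seen[(action, context)] / total < self.min_share


baseline = AgentBaseline()
for _ in range(50):
    baseline.observe("support-bot", "read_ticket", "business_hours")
```

Here a support agent that has only ever read tickets during business hours would be flagged if it suddenly tried `export_customer_table` off hours. Tracking how often such flags turn out to be benign is one way to measure the false positives mentioned above.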

AI at Work in the Real World

Frontline and distributed work.

Retailers, BPOs, logistics companies, and global workforces were already wrestling with slow, brittle VDI setups long before AI. Rolling out secure access often meant shipping hardware, configuring VPNs, and accepting laggy user experiences. That also made it difficult to experiment with AI tools on the front line, even as expectations rose.

Recent case studies show a different pattern emerging:

- A national retailer with thousands of locations replaced VDI with Island on Surface tablets and went live during the Memorial Day peak period. Getting all stores online in weeks and decreasing average transaction times led to more sales per day.

- Mattress Firm deployed Island to 2,400 stores and 7,000 devices in under two weeks, replacing VDI with a faster, more user-friendly browser-based solution for point-of-sale tablets.

- Teleperformance secures a 500,000-employee workforce across 170 countries using Island as employees’ “window into both corporate and client service environments,” reducing VDI reliance and extending endpoint lifecycles.

In all of these instances, the browser became the unified application surface and the enforcement point for data protection and access controls. When AI copilots and agents can be delivered directly within a governed browser environment, they can help frontline workers handle more complex interactions without exposing data to uncontrolled tools.

Sports and gaming.

Sports and gaming organizations also combine some of the most demanding conditions for AI at work: real-time decision-making, massive telemetry, and highly diverse roles, from engineers and analysts to creatives and operations. And when milliseconds and small errors translate directly into lost races, broken experiences, or toxic communities, the stakes are immediate.

Studios and platforms are already exploring how to embed AI agents into games and services—for hints, NPC behavior, personalization, and live operations. As those same agents become part of the attack surface, however, they must be treated like any other critical component.

On the sports side, teams like Hendrick Motorsports show how browser-delivered security can support AI-ready, data-intensive work without sacrificing speed. Hendrick replaced legacy security tools like Zscaler and reduced VPN usage—saving over $100K per year—all by standardizing on Island’s enterprise browser.

This is a massive opportunity, but the product itself is potentially an AI product. We have to secure the AI agents and the data they touch just like any other critical system.

Highly regulated and data-sensitive sectors.

Financial services, healthcare, higher education, and digital health organizations are applying AI where risk, regulation, and trust truly intersect. Here, data and models are treated as sovereign assets, with governance covering where data lives, how models are trained, and who can act on AI outputs.

To protect HIPAA-covered data in fully virtual care models, simplify IT, and reduce costs—all while preparing to integrate AI into virtual care workflows safely—digital health organizations like Omada Health are choosing Island. Meanwhile, banks like the Bank of Marion are using Island to build browser-layer RPA modules for multi-system workflows—achieving higher efficiency and zero human error—paving the way for AI-enabled automation in similar patterns.

We’re on the cusp of a huge transformation. Data accuracy, ownership, and stewardship aren’t just compliance issues. They’re mission-critical to how AI will shape the balance sheet.

Financial Services

In financial services, leaders are combining ALM, risk, and AI agendas. Data sovereignty, governance, and cloud strategy are now fundamental to how they think about AI: classifying data, building “sovereign cores,” and measuring initiatives by their impact on risk, capital, and returns.


Healthcare

AI is already embedded in clinical workflows across healthcare organizations. Imaging AI enhances low-dose scans to detect early tumors, machine learning supports clinical decision-making, and AI-powered virtual care scales counseling and care coordination.

Still, models are only as good as the data they’re trained on. Misinformation, disinformation, and incomplete datasets can poison training data and lead to biased or unsafe recommendations.


Precision medicine and clinical decision support will rely entirely on AI models; if those models are trained on corrupted or biased data, the consequences aren’t just financial—they’re life and death.

Higher Education

Higher education has one of the broadest threat landscapes and a mandate to be as open as possible. Those two facts constantly collide.

Faculty and students began using AI daily for research, writing, and analysis ahead of formal rules. In 2024, use of generative AI by higher-ed faculty nearly doubled to 45%, while 86% of students reported using AI in their studies and 24% said they use it daily. Today, those behaviors and expectations are flowing into the enterprise.

Now, higher education institutions like ASU are adopting internal “walled-garden” AI (Create AI) and secure browsers to enable experimentation while protecting research IP and sensitive student data. That pattern—providing internal AI with strong browser-level controls—offers a model for K-12 institutions, too.

Public sector and critical services.

Public-sector agencies and critical infrastructure operators operate under similarly intense regulatory scrutiny and unique constraints. They often carry heavy tech debt: decades-old applications, mainframes, and custom systems kept alive via VDI and VPN.

Now, these environments are facing both AI-enabled threats and AI-enabled expectations. Attackers can use AI to move faster through known weaknesses, while internal teams want AI tools to help them cope with complexity and volume.

In response, leaders in these environments are modernizing in layers:

- Using secure enterprise browsers to consolidate access and controls for employees and contractors without rebuilding every back-end system.

- Incrementally replacing VDI with browser-based, policy-driven access to SaaS and legacy apps, reducing complexity and unlocking AI-ready interfaces.

- Adopting frameworks like MITRE ATT&CK and MITRE ATLAS and mapping them into AI-assisted SOC operations to close the detection and response speed gap.

State-level organizations are similarly rethinking defense layers: embracing continuous monitoring, shifting DLP and identity controls closer to the browser, and exploring containerization to limit blast radius as AI-enabled threats probe their environments.

AI doesn’t change what attackers want to do. It changes how fast they can do it. Our job is to close that speed gap—prevent where we can, and detect, respond, and recover faster where we can’t.

Software, developers, and AI-native organizations.

Developer-heavy organizations were early adopters of AI for coding, debugging, and documentation. Now, they face a different set of challenges: controlling where code goes, managing access tokens and secrets, and ensuring AI-generated code meets security and quality standards.

Companies like Sonar, whose products are used by 7 million developers worldwide, are moving internal tools and partner access behind secure browsers. That way, employees and contractors are forced through governed paths even as they use AI-assisted dev tools. Security leaders in similar environments are adopting practices such as:

- Scanning AI-generated code for vulnerabilities as part of CI/CD.

- Mapping exposure from GitHub and other repositories into unified risk models.

- Using AI to assist threat modeling, infrastructure-as-code scanning, and policy-as-code authoring.

This is the group most likely to experiment with AI agents that act across multiple systems—further increasing the importance of agent identity, delegation, and governance.

The hardest problem is getting people to care enough to build with AI in their own workflows.

The New World of AI at Work

In 2026 and beyond, the most effective organizations will treat the browser as the place where AI, data, and humans meet. As the default workplace where most tasks are completed, it will inevitably become the enforcement point where policies are interpreted and applied, as well as the observability layer where AI usage, data flows, and session behaviors are logged and understood.

Securing that layer, while making it the easiest, most powerful way to work, will be key to adopting AI at scale without losing control. Models will continue to improve and tools will keep multiplying, but the real differentiators will be:

Governance.

Clear principles, accountable owners, and repeatable processes for AI systems.

Identity and access.

Treating AI agents as first-class identities with scoped permissions, delegated by humans, and fully auditable.
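To make “scoped, delegated, and auditable” concrete, here is a minimal Python sketch. It assumes a hypothetical model in which an agent can receive only scopes its delegating human already holds, and every authorization decision, allowed or denied, is appended to an audit log. The scope strings and helper names are illustrative, not any identity provider's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentIdentity:
    """An AI agent acting on behalf of a named human, with its own scoped permissions."""
    agent_id: str
    delegated_by: str
    scopes: frozenset[str]
    audit_log: list[dict] = field(default_factory=list)


def delegate(agent_id: str, human_id: str, human_scopes: set[str], requested: set[str]) -> AgentIdentity:
    """Grant only the requested scopes that the delegating human already holds."""
    return AgentIdentity(agent_id, human_id, frozenset(requested) & frozenset(human_scopes))


def authorize(agent: AgentIdentity, scope: str, resource: str) -> bool:
    """Check a scope and record every decision, allowed or not, for later audit."""
    allowed = scope in agent.scopes
    agent.audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent.agent_id,
        "on_behalf_of": agent.delegated_by,
        "scope": scope,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


agent = delegate("triage-agent", "alice", {"crm:read", "crm:write"}, {"crm:read", "finance:approve"})
```

Because the grant is an intersection, an agent can never hold more access than the person who delegated it, and the log ties each action back to both the agent and that person.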

Human-first design.

Building AI around people and vertical workflows, investing in literacy, and treating agents as colleagues with responsibilities, not opaque magic.

Signals from the Field

Methodology

This editorial report draws on a mix of quantitative data, Island customer stories, and practitioner interviews to reflect how AI at work is actually unfolding in the browser.

Over the past six months, we conducted in-depth interviews with executives and practitioners across industries: financial services, healthcare, higher education, gaming, manufacturing, public sector, energy, and technology. Many of these conversations also inform standalone articles in trade publications.

Then, we synthesized findings from Island’s own research, customer case studies, and internal telemetry with third-party quantitative studies. Together, these sources provided the quantitative backbone of the report: how widely AI is used, where it is used, where data actually lives, and how often incidents now originate in the browser.

Finally, the editorial team compared themes across sources to identify where AI is already delivering value, where it’s increasing risk, how attackers are using it as a force multiplier, and what leading organizations are doing differently at the browser, governance, and operational layers.

The result is a 360-degree snapshot of AI at work in 2026 and a practical stance on what leaders can do next.