Aby Rao, Deputy CISO at an HCM SaaS company, calls for stronger governance and responsible AI as smart glasses challenge privacy and public trust.

Artificial intelligence has leapt from software into everyday devices, advancing faster than the rules meant to keep it in check. Smart glasses and other AI-powered tools promise new levels of convenience, yet they also raise urgent questions about privacy, consent, and control. The challenge for security leaders is to govern this new wave of technology responsibly before trust becomes the next casualty of progress.

It’s a gap that cybersecurity leaders like Aby Rao are now tasked with closing. Currently Deputy CISO at an HCM SaaS company, formerly a Director at KPMG, and a member of the Harvard Business Review’s Cybersecurity Advisory Board, Rao believes the industry’s traditional approach to technology is no longer suited to the AI era. The core problem, he says, is a widening divide between the speed of innovation and our collective ability to keep up.

"When we're looking at AI development, it’s far exceeding AI education and AI awareness. The technology is racing ahead, but the understanding of how to use it responsibly is still miles behind. As a security professional, it’s my job to close that gap before the consequences outpace our ability to manage them," Rao says.

So who’s really on the hook when data is breached? Rao places the ultimate burden of responsibility firmly on the organizations that act as custodians for vast amounts of sensitive information.

The bigger prize: "Organizations that put out AI-enabled devices and applications are at higher risk because, as custodians, it's their responsibility to manage a large volume of information. These technologies collect and process enormous amounts of personal data, making them prime targets for attackers. A bad actor looking to maximize their effort will always go after the biggest trove of data rather than individual users," Rao explains.

Trust starts at zero: Rao's philosophy is built on a single, powerful premise: in an environment of inherent skepticism, organizations must proactively earn trust by proving they are worthy partners. "Trust starts at zero. I don’t trust any organization by default, and neither should users," he says. "It’s up to companies to earn that trust by providing clear, consistent signals that show they’re acting responsibly. Real security is built through partnership, not assumption."

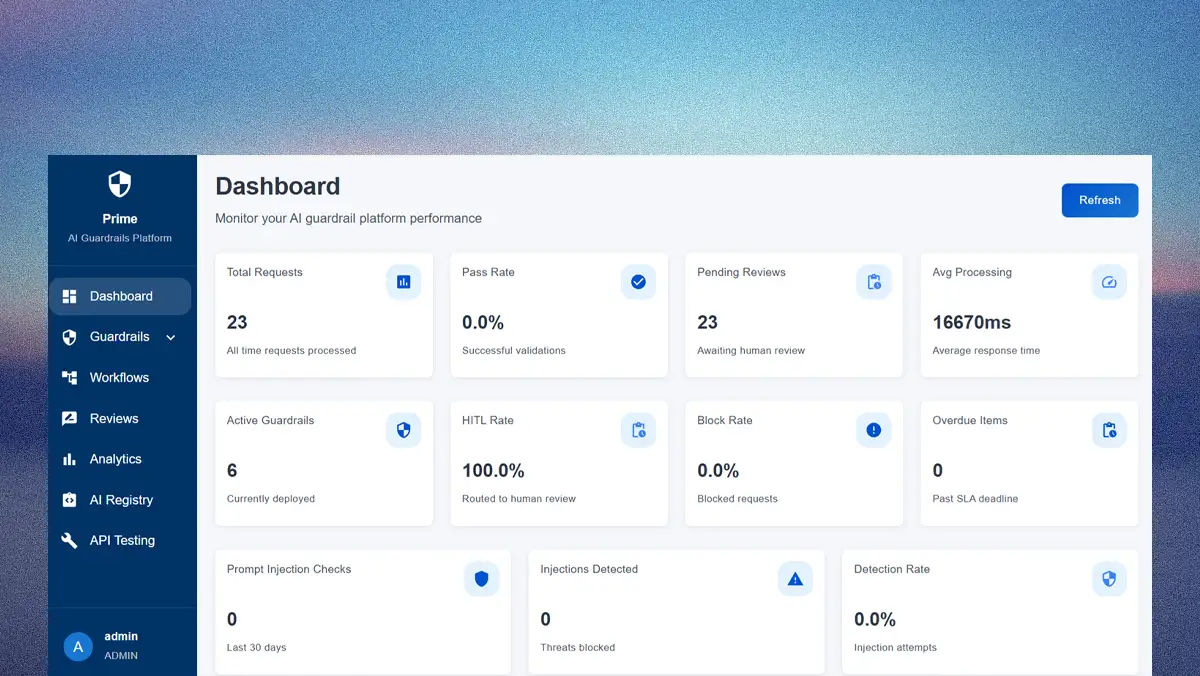

The solution, according to Rao, lies in a responsible AI framework that calls for companies to expand their focus to include fairness, bias, and a new, two-tiered model of transparency. At the macro level, this means communicating clearly through accessible channels like blogs and podcasts rather than relying solely on tedious privacy policies. At the micro level, he believes users should be given direct control through data dashboards and the ability to store their information locally.

The train has left: Among the latest wave of AI-powered devices, smart glasses stand out for how seamlessly they blend technology into daily life. Rao sees them as both a breakthrough and a warning sign. "The 'normalization of surveillance' train left the station a while ago," he explains. "Even before smartphones, CCTVs were everywhere, and the consent to be recorded has not really been applied."

Recording in progress: In response, Rao suggests that ethical principles can be made tangible through a two-pronged design strategy: providing clear social cues for bystanders and building systemic trust for the user through better data policies. "We need simple physical cues, like the old red recording light, to show when these glasses are active. People deserve the chance to know, react, or step away. And not every recording should live forever—data should expire by default, with users deciding what’s worth keeping."

But when things go wrong and privacy is violated, Rao calls for a systemic approach to accountability. In his view, solving the challenge requires a chain of responsibility that extends from the user to the organization and, critically, to external governing bodies.

The chain of command: "This challenge requires a multi-layered, shared responsibility model. It starts with the individual user and their societal responsibility. It then moves to organizations, which must have controls in place. Finally, there must be a layer of governance from regulatory bodies [and compliance frameworks] like SOC, PCI, and HIPAA," says Rao.

Enter the regulators: "The regulatory piece is key, especially when it deals with human well-being and physical safety. There has to be strong, continuous governance from an external entity that organizations are accountable to. Gadgets are being released left and right, but it's unclear how many are truly audited or assessed before they hit the market."

Ultimately, Rao argues that the era of self-regulation has run its course. Protecting individual safety, he says, requires clear rules, active enforcement, and spaces where recording devices are simply off-limits. "There needs to be something more than just a pamphlet that tells you to be mindful of your use. That phrase can mean anything to anyone. Some spaces must stay private—banks, hospitals, schools—places where cameras don’t belong. There should be a simple rule: put the gadget in a locker before you walk in."