Firas Jarboui, Head of Machine Learning at Gorgias, explains how to secure agentic AI browsers by gating actions and segregating context from workflows.

Agentic AI browsers are fundamentally changing how we interact with information. Unlike chatbots, these agents can retrieve, aggregate, and act on information, which magnifies both their utility and their risk. That risk, however, stems less from the technology itself than from how these agents are used, governed, and designed. As enterprises grant autonomous agents deeper access to files, APIs, and system tools, they open new threat vectors, such as prompt-injection attacks and data leakage.

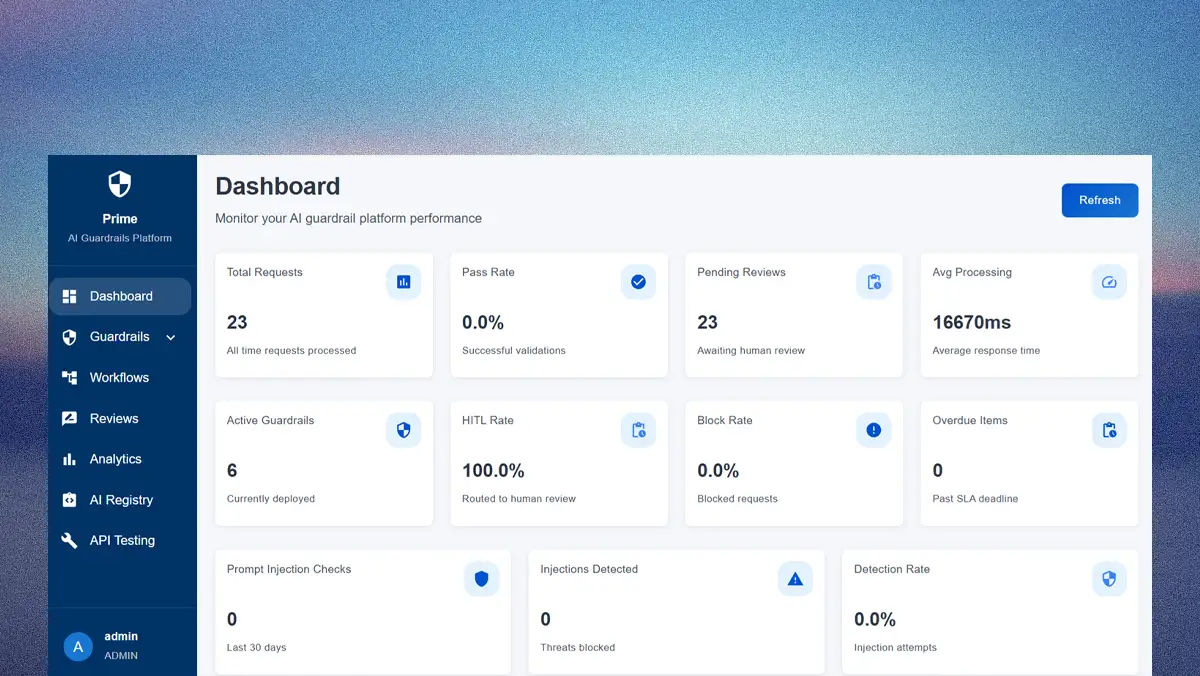

At the forefront of this change is Firas Jarboui, Head of Machine Learning at Gorgias, where he leads AI initiatives that automate millions of daily interactions for 15,000 e-commerce businesses. Jarboui holds a PhD in Mathematics & Machine Learning, and years of experience in this high-stakes environment have given him a pragmatic blueprint for scaling AI safely.

"The shift we're going to see with agentic AI browsers is that the discovery is going to be processed by the agents themselves," Jarboui says. In this nascent space, many are simply "throwing darts in the dark," he explains. To cut through the uncertainty, he offers a framework that begins with a key distinction.

For decades, online discovery has been user-driven, Jarboui continues. On Google, users get a ranked list and judge for themselves what’s trustworthy. But AI browsers, powered by techniques such as Retrieval-Augmented Generation (RAG), flip this model on its head.

Summary judgment: With summarization solved, the real challenge becomes source selection. "The part we must be careful about is discovery, which dictates decision-making in tomorrow's world," Jarboui advises.

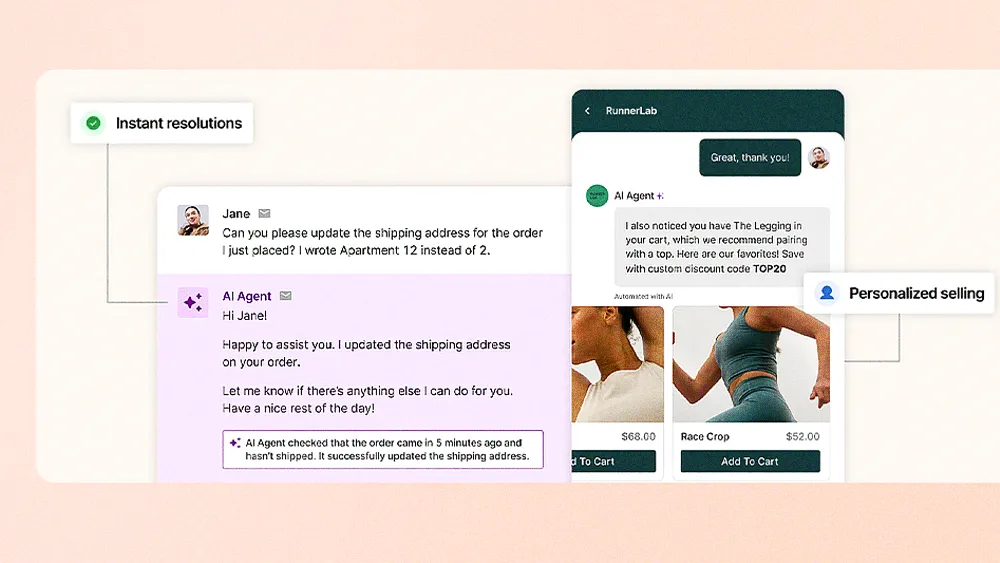

Cite your sources: Now, the agent decides which sources to consult and aggregates the findings. "Did it find this information in a subreddit?" Jarboui asks. "Did it find it on Wikipedia? Or did it go through academic articles to reach this conclusion?"

Meanwhile, commercial implications compound the issue. "If you're shopping, you need to ensure the end user is aware of the source," Jarboui says. For instance, an agent might prioritize low-cost merchants over a user's preferred brands. "Did the agent go to the brand I'm usually interested in? Or did it go to a low-cost provider to get that information?"
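To make the provenance point concrete, here is a minimal Python sketch, assuming each retrieved passage carries its URL and a source-type label. The `Snippet` class, the `render_answer` function, and the labels are hypothetical and not part of any particular agent framework. The function appends a numbered source list and a per-type breakdown to the agent's summary, so a user can see at a glance whether an answer leaned on forum posts, encyclopedias, or academic work.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Snippet:
    """A retrieved passage plus the metadata needed to attribute it."""
    text: str
    url: str
    source_type: str  # hypothetical labels, e.g. "forum", "encyclopedia", "academic"


def render_answer(summary: str, snippets: list[Snippet]) -> str:
    """Attach a numbered source list and a per-type breakdown to a summary."""
    breakdown = Counter(s.source_type for s in snippets)
    lines = [summary, "", "Sources:"]
    for i, s in enumerate(snippets, start=1):
        lines.append(f"[{i}] ({s.source_type}) {s.url}")
    # Sort by source type so the breakdown line is deterministic.
    mix = ", ".join(f"{n} {t}" for t, n in sorted(breakdown.items()))
    lines.append(f"Source mix: {mix}")
    return "\n".join(lines)
```

For example, a summary built from two forum posts and one academic paper would end with `Source mix: 1 academic, 2 forum`, surfacing the kind of question Jarboui raises about where a conclusion came from.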

Such architecture demands a new security posture, Jarboui continues. Unauthorized data exposure isn't a new threat, but contextual enforcement presents a fresh challenge: "The hard part is deciding, based on business context, which actions to gate."

Context control: Low-probability, high-impact edge cases often pose far greater business risks than routine performance issues, Jarboui explains. "Which actions should never be executed without user validation? And what information should be gated from even entering the context of the LLM?"
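Jarboui's second question, what should never reach the model at all, can be answered deterministically before a prompt is assembled. The sketch below assumes a hypothetical per-task allowlist; `CONTEXT_ALLOWLIST`, `build_context`, and the field names are illustrative only. Only approved fields are passed to the LLM, and unknown tasks get nothing.

```python
# Hypothetical per-task allowlists: only these fields may enter the LLM context.
CONTEXT_ALLOWLIST: dict[str, frozenset[str]] = {
    "order_status": frozenset({"order_id", "status", "shipping_carrier"}),
    "product_question": frozenset({"product_name", "description"}),
}


def build_context(task: str, record: dict[str, str]) -> dict[str, str]:
    """Return only the fields this task is allowed to expose to the model.

    Unknown tasks get an empty context (deny by default).
    """
    allowed = CONTEXT_ALLOWLIST.get(task, frozenset())
    return {k: v for k, v in record.items() if k in allowed}
```

Applied to a customer record that also contains `card_number` and `email`, an `order_status` request passes along only the allowlisted fields that are present. Denying by default means a newly added task exposes nothing until someone explicitly decides what it needs.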

Buyer beware: Ungated access also invites disaster. "If we try to prompt our way in, yes, it will work 99.99% of the time," Jarboui says. "But there is always the risk of the LLM hallucinating and buying you a car you never asked for."
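The alternative to prompting your way in is a gate that sits outside the model. In this minimal sketch, the LLM can only propose actions; a deterministic `gate` function decides whether each proposal runs, waits for user confirmation, or is rejected. The function, the action names, and the policy tables are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    NEEDS_USER_CONFIRMATION = "needs_user_confirmation"
    REJECT = "reject"


@dataclass(frozen=True)
class ProposedAction:
    """An action the LLM wants to take; the model never executes it directly."""
    name: str
    amount: float = 0.0  # monetary value, if any (hypothetical field)


# Hypothetical policy tables; a real deployment would derive these from business context.
ALWAYS_CONFIRM = frozenset({"purchase", "refund", "delete_account"})
ALLOWED = frozenset({"search", "read_page", "add_to_cart"}) | ALWAYS_CONFIRM
AUTO_APPROVE_LIMIT = 0.0  # any spend at all requires a human


def gate(action: ProposedAction) -> Decision:
    """Deterministic check applied after the model proposes, before anything runs."""
    if action.name not in ALLOWED:
        return Decision.REJECT
    if action.name in ALWAYS_CONFIRM or action.amount > AUTO_APPROVE_LIMIT:
        return Decision.NEEDS_USER_CONFIRMATION
    return Decision.EXECUTE
```

Under this policy, a hallucinated `ProposedAction("purchase", 32000.0)` stops at `NEEDS_USER_CONFIRMATION` regardless of how the prompt was worded, and an action outside the allowlist, such as `transfer_funds`, is rejected outright.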

As browsers become embedded in workflows, users need visibility into how they operate. Instead of a new playbook, Jarboui suggests reframing the problem in more familiar terms.

Business as usual: Vulnerabilities emerge over time in all software, he explains. "Even when we release code, there are vulnerabilities we didn't realize existed. It's the usual game: people try to hack your agents to test your protection. Then data leaks, you get bashed on Hacker News, and you have to fix the bug." Preparing for this cycle in advance can help product engineering leaders stop fearing it.

To navigate this shift, Jarboui points to emerging patterns in "context engineering." The approach separates AI knowledge from prompts and uses deterministic pipelines to control the flow of data. Two camps are forming in response. The first champions a "plug-and-play" approach. "There is another paradigm on the rise where we say, 'Don't even think about it,'" Jarboui says. "Just plug the agents into all the tools, prompt whatever you want, and hope it will be safe." The second follows the more deliberate philosophy of context engineering.

Ultimately, only one of the two has a sustainable future, Jarboui concludes. "I think the second camp, even if it's slower to market, will be the one that survives. Both expose the same value, but one risks a very damaging interaction with its customer base." In his view, the companies that prioritize a deliberate architecture will succeed. "Adopt a pattern where you segregate context from the workflow. Use deterministic decision pipelines whenever you can, and everything will be safe downstream."
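One way to read "segregate context from the workflow" is to keep the workflow itself in ordinary code and confine the model to a narrow, validated judgment. The sketch below illustrates that assumption and is not Gorgias's implementation. The `route` function, the intent labels, and the step names are hypothetical, and the model is passed in as any function that maps text to a label.

```python
from typing import Callable

# The model is injected as a plain function so the workflow does not depend on
# any particular LLM client.
Classifier = Callable[[str], str]

INTENTS = ("order_status", "return_request", "other")


def route(message: str, classify: Classifier) -> str:
    """Deterministic workflow: the LLM only labels intent; code decides what happens."""
    label = classify(message).strip().lower()
    if label not in INTENTS:
        # Anything outside the fixed label set falls back to a safe default.
        label = "other"
    if label == "order_status":
        return "lookup_order"  # read-only step, safe to automate
    if label == "return_request":
        return "queue_for_user_confirmation"  # has side effects, so it is gated
    return "handoff_to_human"
```

With a stub classifier such as `lambda m: "order_status"`, `route` returns `"lookup_order"`. If the model returns something unexpected, like `"buy a car"`, the pipeline falls back to `"handoff_to_human"` instead of improvising.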