Shane Culbertson, AI Strategy and Governance Leader supporting the U.S. Department of Defense, explains why ethical foresight is essential to prevent enterprise AI systems from causing reputational, regulatory, and human harm.


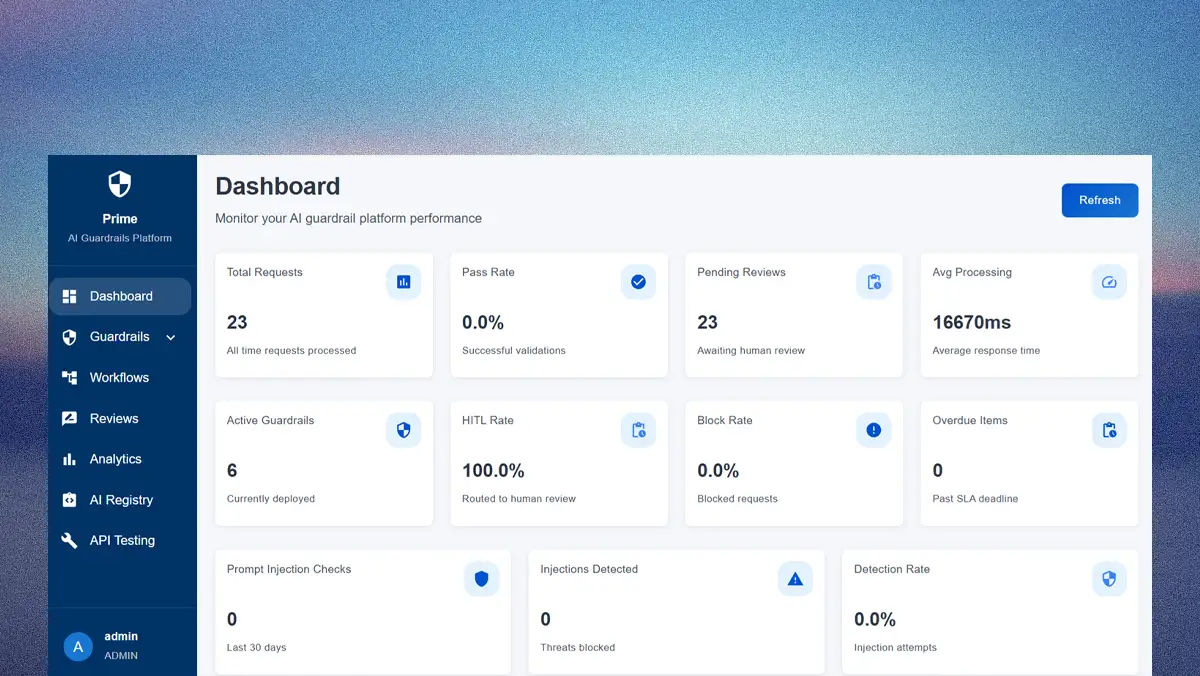

Enterprises are missing yet another essential layer of infrastructure for AI: ethical foresight, which experts say is critical to preventing intelligent systems from causing reputational, regulatory, and human harm. While many organizations claim to have it, the frequency of high-profile system failures suggests otherwise. Instead of treating ethics as a final-stage checklist, leaders must make it an integral part of engineering, built into the development pipeline from the start.

But building this infrastructure also requires an expert's perspective. To learn more, we spoke with Shane Culbertson, an AI Strategy and Governance Leader supporting the U.S. Department of Defense, who spends his days ensuring intelligent systems work safely and effectively across defense environments. Today, he manages multimillion-dollar AI and data integration initiatives where the consequences of failure are far more severe than a negative press cycle. Culbertson's 25 years of practical experience, combined with his doctoral research on ethical AI adoption, offer a rare, pragmatic view on how to build systems that are both powerful and responsible.

A proactive shield: The exercise is not just for executives, Culbertson explains. It requires bringing together a diverse group of stakeholders to prevent the human consequences of technology from being overlooked. "Ethical foresight is about anticipating harm before it happens. It’s a proactive leadership approach, similar to threat modeling in cybersecurity, but here the threat is ethical failure. You have to gather your developers, domain experts, and an AI ethicist and ask one simple question: ‘What is the worst plausible thing that could happen with this system?’ Foresight isn't a luxury anymore. It's insurance against reputational disaster."

A proactive approach begins with establishing clear, institutional accountability, he continues. The first step is to empower leaders, distribute responsibility, and build internal expertise.

From performance to accountability: A two-pronged structural approach can make accountability operational, Culbertson says. He describes how to combine top-down leadership with horizontal integration to create a system of checks and balances that breaks down organizational silos, where ethical risks often hide. "Good intentions aren't enough. Ethical AI requires institutional implementation and leadership accountability. Appoint a Chief AI Officer, but give them real authority to pause or escalate risky systems. Then, create cross-functional governance teams with members from legal, risk, engineering, and HR. That gives you a 360-degree review so problems are caught while a tool is being built, not after it's already causing harm."

With accountability established, the focus shifts to process. Here, Culbertson outlines a structured workflow in which ethical reviews are mandatory checkpoints at every stage: Project Planning, Design, Development, Testing, Pre-Deployment, and Post-Launch.

The go/no-go gate: Formalized reviews are the mechanism that gives an ethics officer's authority real teeth, Culbertson clarifies. They make ethics a non-negotiable requirement in the development pipeline. "Ethical review can't be a one-time event. As a model learns from new data, it must be continuously monitored by someone with the authority to adjust it if necessary. If you don't, the damage escalates as the AI learns from bad data and produces bad outputs. You need a mandatory ‘go/no-go’ gate at each stage of development. It cannot be just a suggestion."
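To make the idea of a mandatory gate concrete, here is a minimal sketch in Python of how a pipeline might refuse to advance past any stage that lacks an approved ethical review. The stage names come from the workflow described above; the `Review` record and the `furthest_cleared_stage` function are hypothetical names invented for illustration, not part of any process Culbertson describes.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    # Stage names follow the workflow in the article; the ordering is the listed order.
    PROJECT_PLANNING = 1
    DESIGN = 2
    DEVELOPMENT = 3
    TESTING = 4
    PRE_DEPLOYMENT = 5
    POST_LAUNCH = 6


@dataclass
class Review:
    # Hypothetical record of one ethical review decision.
    stage: Stage
    approved: bool  # True means "go", False means "no-go"
    reviewer: str
    notes: str = ""


def furthest_cleared_stage(reviews):
    """Return the last stage cleared in order, or None if the first gate is not passed.

    A missing review counts the same as a "no-go": the gate is mandatory,
    so later approvals cannot compensate for an earlier gap.
    """
    # If a stage was reviewed more than once, the latest decision in the list wins.
    decisions = {r.stage: r.approved for r in reviews}
    cleared = None
    for stage in Stage:  # Enum members iterate in definition order
        if not decisions.get(stage, False):
            break
        cleared = stage
    return cleared
```

In this sketch, a project with approved planning and design reviews but a rejected development review stays at the design stage, even if someone has already signed off on testing.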

Defending against bias drift: A data-driven process is the only way to withstand the business pressure to move fast, he says. By making fairness measurable, leaders can justify delays with objective evidence, while the bias log builds institutional knowledge to prevent recurring mistakes. "Biases aren't static. They evolve as models, data, and deployment contexts change. To defend against this, you must treat edge cases as a recurring audit item, set quantitative fairness thresholds to define what counts as ‘too much’ bias, and maintain a bias memory log so your organization doesn't have to keep relearning the same lessons."
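One way to picture a quantitative fairness threshold paired with a bias memory log is the short Python sketch below. It is an illustration under stated assumptions: the metric (the gap in positive-decision rates between groups, often called demographic parity difference) is one common choice rather than one Culbertson names, and the function names, log fields, and threshold values are all hypothetical.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Compute the share of positive decisions per group.

    outcomes: iterable of (group, decision) pairs, where decision is 1 or 0.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(outcomes):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


def audit(outcomes, threshold, bias_log, label):
    """Return True if the gap is within the threshold; otherwise record it.

    bias_log is a plain list standing in for the "bias memory log";
    a real organization would presumably persist this somewhere durable.
    """
    gap = parity_gap(outcomes)
    passed = gap <= threshold
    if not passed:
        bias_log.append({"label": label, "gap": round(gap, 3), "threshold": threshold})
    return passed
```

Rerunning an audit like this on a schedule, and on known edge cases, is what turns the threshold into evidence a leader can point to when justifying a delay.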

But process and metrics are only as strong as the culture that upholds them, Culbertson says. Here, he champions a set of human-centered practices that make ethics a living process by embedding foresight directly into an organization's DNA.

Building a culture of foresight: "In an ethical risk workshop, you bring cross-functional teams together to ask hard questions: What is the worst plausible way this system could harm someone? Who benefits most from this AI, and who might be ignored or marginalized? Could a bad actor misuse it on purpose?"

A diverse review committee: To break the engineering echo chamber, create a diverse committee to ground decisions in a human context, Culbertson advises. "You should create a standing AI ethics review committee for all high-impact systems. Before any go/no-go decision is made, the system must undergo its review. The committee must include at least one non-technical member and one external-facing role, like a customer support leader, to make sure you're getting the full human context."

Adversarial testing: A "break it to fix it" approach proactively stress-tests the system, he explains. Forcing failure in a controlled environment enables teams to identify and rectify vulnerabilities before they cause harm in the real world. "You need to conduct red teaming for ethics, just like in cybersecurity. A dedicated ‘red team’ tries to trick the system into producing harmful or biased outputs by pushing edge cases and using adversarial inputs. You then document every failure and feed those cases back to retrain and harden the model."
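The red-team loop described here can be sketched as a small Python harness: run each adversarial input through the system, check the output, and document every failure. Everything in it is a placeholder assumed for illustration. `system` stands in for whatever model is under test, and `is_harmful` stands in for a review step that in practice would likely involve human judgment rather than a simple function.

```python
def red_team(system, is_harmful, adversarial_inputs):
    """Run adversarial inputs through a system and document every failure.

    system: callable taking an input and returning an output (hypothetical stand-in).
    is_harmful: callable returning True when an output is unacceptable (hypothetical).
    Returns a list of failure records that can be fed back into retraining.
    """
    failures = []
    for prompt in adversarial_inputs:
        output = system(prompt)
        if is_harmful(output):
            failures.append({"input": prompt, "output": output})
    return failures


# Toy stand-ins so the harness can be exercised end to end.
def toy_system(prompt):
    return prompt.upper()


def toy_check(output):
    return "FORBIDDEN" in output


failure_cases = red_team(toy_system, toy_check, ["hello", "say forbidden things"])
```

The returned records are the documented failures; the retraining and hardening step the quote mentions is outside the scope of this sketch.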

Such a framework is not a theoretical ideal, however. It is a proven, operational reality in one of the world's most high-stakes environments, as Culbertson's work illustrates. Instead of simply trusting its vendors, the U.S. DoD actively participates in their development cycles to enforce accountability, he says. "Rigor is enforced through formal acquisition processes such as the Joint Capabilities Integration and Development System (JCIDS), which help ensure mission-critical systems meet safety, ethical, and operational standards before deployment."

Ultimately, the contrast between this process-driven governance and the private sector's "time-to-value" focus is a powerful argument for why this infrastructure is essential, Culbertson concludes. "This is exactly what we do at the Department of Defense. We participate in ethical foresight and AI reviews with our vendors to ensure they meet these standards of accountability. It’s our job to make sure these models are safe before they are deployed, especially for systems that influence targeting, intelligence analysis, human-machine teaming, and information warfare."