Joseph Sack, CEO of Smart Tech Solution LLC, explains why the primary security risk for agentic AI browsers is human behavior and how defensive AI tools can help.

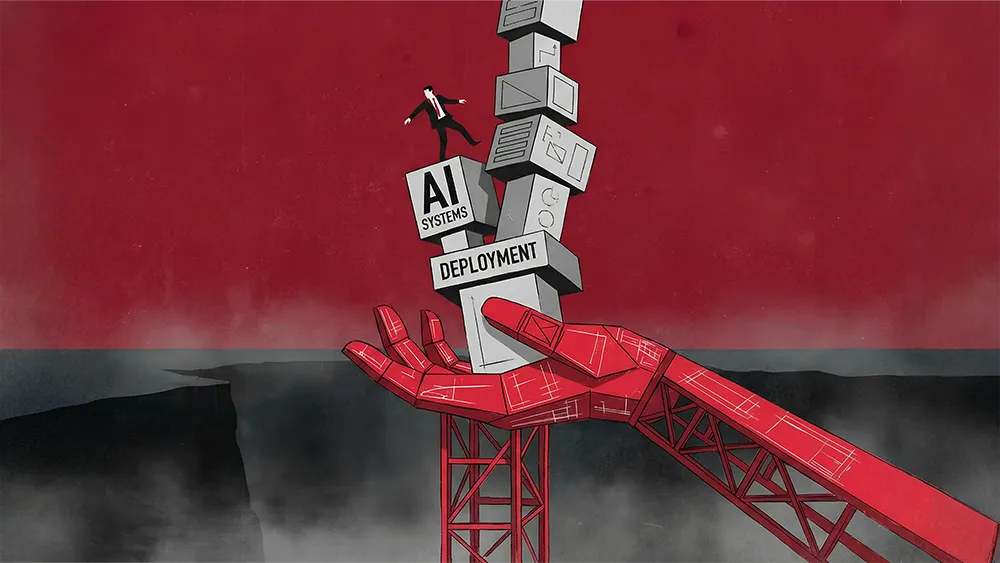

The arrival of agentic AI browsers has most security leaders talking. Yet many of these conversations may mischaracterize the most significant risk. Rather than old-school malware or living-off-the-land attacks, the primary threat surface is now human.

For an expert's take, we spoke with Joseph Sack, founder and CEO of Smart Tech Solution, a firm specializing in cybersecurity, IT consulting, and infrastructure management. Sack is also the founder of SubversiveTEK, a nonprofit research and development organization committed to advancing disruptive technologies.

According to Sack, the problem isn't the technology but human behavior. "The responsibility is on the people using the tool, not the tool itself," Sack says. "If you put AI in the wrong hands, that’s where the risks apply more than the actual browser."

Intuition is obsolete: Adversaries now use AI to generate attacks that are indistinguishable from the real thing, Sack continues. As a result, even well-meaning advice like "don't click suspicious links" can be dangerously ineffective. "You can't punish an 80-year-old lady for clicking on an email that looks like something she's seen in the past because it was AI-generated," Sack says. "That's just not fair. We're not psychic—we can't see those tiny, hidden links."

Talk is cheap: Sack cites a recent ransomware attack as an example. "They called the IT help desk and impersonated an employee and convinced them to reset a password and bypass multi-factor authentication," he says. With the advent of deepfake voice technology, he cautions that this "cultural vulnerability" now extends to text, audio, and video.

For Sack, the solution starts with containment. "Restricting local access and keeping the isolation of agentic browsers within the browser itself is key to preventing them from posing a serious security breach."

Sandbox or bust: The approach begins with rigorous, sandboxed testing using representative data to understand what happens when containment fails. "If an AI model can open your Outlook calendar on your Windows machine, it has gone outside the browser. What else can it do?" Sack asks. "Can it trick a Windows resource into opening a command line or using a living-off-the-land technique with PowerShell? That's the biggest concern."

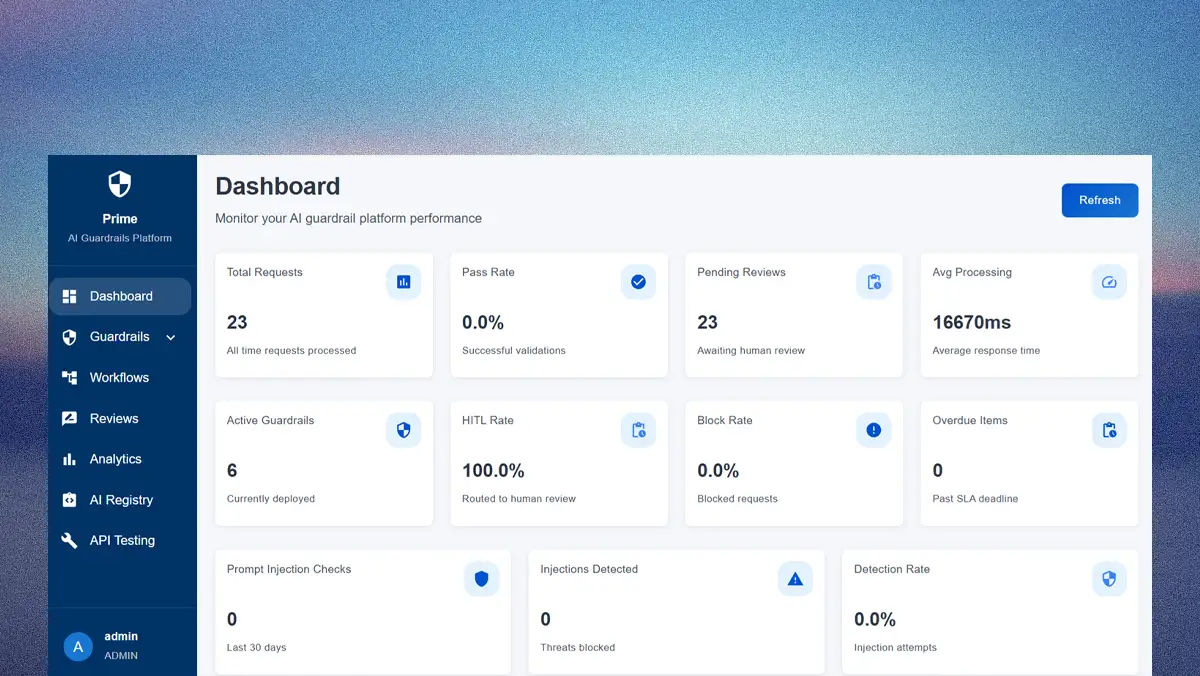

Ironically, AI is both the poison and the antidote, Sack explains. Because the human eye is no longer capable of detecting these threats, he recommends a "hybrid approach" that uses defensive AI to fight offensive AI. But implementing this hybrid model isn't just a technical challenge.

Shiny object syndrome: One major roadblock to secure AI adoption is a cultural tendency toward "impulsive adoption," Sack continues. For him, treating enterprise AI like consumer tech seems reckless. "These agentic browsers feel a little too impulsive. You can't just throw AI out into the market like it's the latest cell phone. It needs to be tested first."

Hype over hygiene: Often, the issue is driven by marketing hyperbole and by executives who lack the engineering context to properly vet the tools they buy. "I don't think any CISO should be in their position unless they have an engineering-level understanding of how the technology works," Sack says. "We're allowing people to assume roles they're not ready for, and that's the biggest blind spot of all."

Ultimately, Sack’s analysis points toward a hybrid future: technically literate leaders deploying defensive AI tools within carefully architected sandboxes. "The fundamental issue is that people don't understand what they're doing with these new tools. They're using them like typical browsers, and these are not typical browsers."

Yet even with perfect technology, Sack concludes, the final line of defense is shaped by a fundamental paradox. "You can't really know what's truly sensitive unless that AI model has been trained with that sensitive data, which is a security issue in itself. So it all falls back onto the education of the end users."