Mahesh Varavooru, Founder of Secure AI, warns that Shadow AI creates a hidden two-way risk loop and calls for runtime guardrails and sanctioned sandboxes to secure enterprise innovation.

Shadow AI has opened a two-way pipeline of enterprise risk: sensitive data flows out to public models, and unvetted outputs flow back into core systems. Employees paste proprietary information into tools like ChatGPT, then feed AI-generated responses into business workflows that leadership assumes are sound. Traditional controls were never designed for this loop, and what looks like a productivity shortcut is fast becoming a board-level exposure.

Mahesh Varavooru is an AI and cloud technology leader who has built his career on the front lines of enterprise innovation. As the Founder of Secure AI, a company focused on AI security and governance, Varavooru brings a practitioner's perspective. His insights draw on nearly fifteen years of experience and a track record that includes founding an earlier AI consultancy that achieved a successful exit and leading cloud strategy for global organizations like Chubb and Morgan Stanley.

“When employees copy internal data into tools like ChatGPT or Gemini, you’re not just dealing with shadow IT anymore. You’re creating data leakage, IP exposure, and decision contamination that can flow straight into critical business systems,” says Varavooru. The result is a new frontier of unseen risk, one amplified by a reported 30-fold increase in the volume of corporate data sent to generative AI tools.

Copy, paste, consequence: But the problem goes far beyond simply using an unapproved tool. The risk lies in the exchange itself: proprietary data is fed into external models, and their unvetted outputs are injected back into core business processes. "When an employee takes internal data, pastes it into a public tool like ChatGPT for a summary, and then applies that output somewhere else, that action creates both data leakage and decision contamination," says Varavooru. "They are contaminating internal decision workflows with unvalidated information, because you simply don't know what the AI gave you back."

Trust but can't verify: At the heart of the issue is validation. AI-generated content introduces a layer of abstraction that can make verification difficult without dedicated systems. "In the past, if you used an unapproved tool, you could still manually validate the output. A fundamental difference with generative AI is that validating the information you get back can be exceptionally difficult unless you have specific evaluation and validation layers in place." This, Varavooru says, is the core risk of shadow AI and a clear departure from earlier forms of shadow IT, whose outputs could often be checked by hand.

Unsanctioned solutions: So why are employees taking these risks? Often the behavior stems from an organizational disconnect. The executive push for AI-driven returns can foster a culture in which employees, lacking sanctioned avenues for innovation, turn to unsanctioned tools that lack basic enterprise security. "Even when tools are available, people still go to ChatGPT or Gemini, and on top of all the existing cybersecurity issues, you are now adding this and increasing your attack surface," says Varavooru.

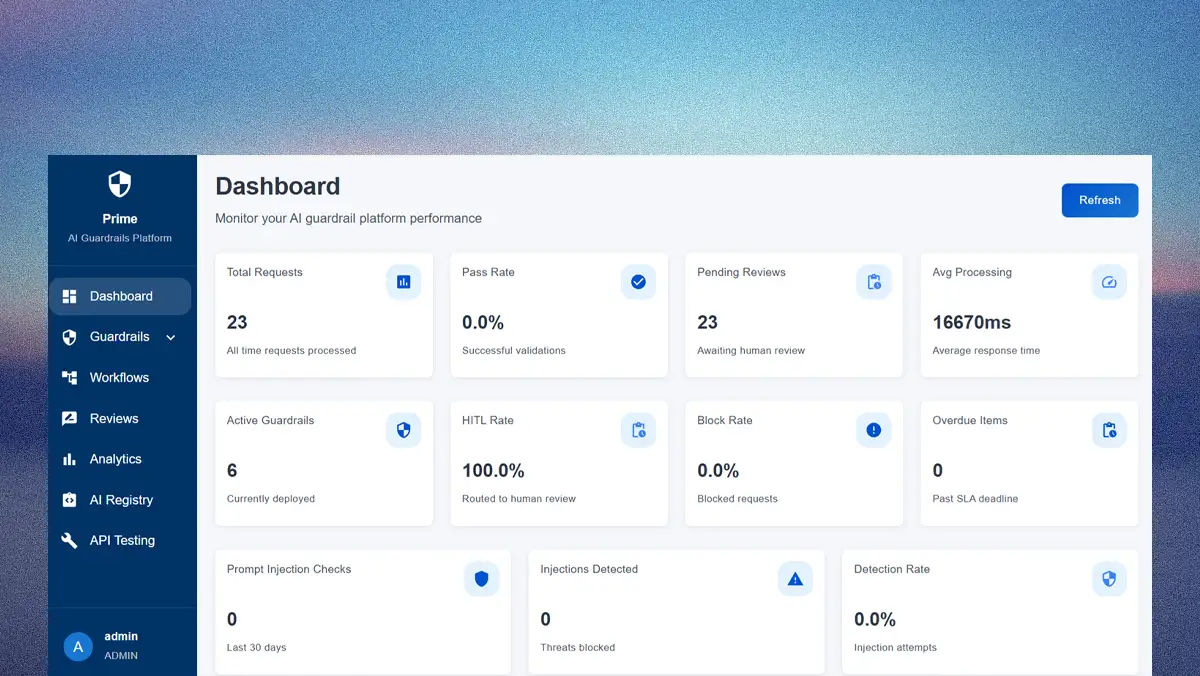

So how do you fix it? Varavooru’s strategy begins with a simple premise: "you can’t manage what you can’t see." His multi-layered approach moves from basic visibility to automated AI security and governance, and its starting point is a full accounting of all AI usage.

Know your network: "The first step is to create a centralized AI inventory and implement active monitoring at the firewall level. That active monitoring gives you the data to generate reports identifying the 'top 10 users' in each department. That is how you expose the gap and understand the true scope of your problem," says Varavooru.
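The reporting step described above can be sketched in a few lines. This is a minimal Python sketch, not Secure AI's tooling: it assumes firewall or proxy logs have already been parsed into records carrying a user, a department, and a destination host. The `LogRecord` shape, the `AI_DOMAINS` watch list, and the `top_ai_users` helper are hypothetical names chosen for illustration.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

# Hypothetical watch list of public generative-AI hosts; a real inventory
# would be maintained centrally and updated as new tools appear.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "gemini.google.com"}


@dataclass(frozen=True)
class LogRecord:
    """Assumed, simplified shape of one parsed firewall/proxy log entry."""
    user: str
    department: str
    host: str


def is_ai_host(host: str) -> bool:
    """True if host is a watched AI domain or one of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def top_ai_users(records: Iterable[LogRecord], n: int = 10) -> Dict[str, List[Tuple[str, int]]]:
    """Per department, the n users with the most requests to watched AI hosts."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for record in records:
        if is_ai_host(record.host):
            counts[record.department][record.user] += 1
    return {dept: counter.most_common(n) for dept, counter in counts.items()}


if __name__ == "__main__":
    logs = [
        LogRecord("alice", "Finance", "chatgpt.com"),
        LogRecord("alice", "Finance", "chatgpt.com"),
        LogRecord("bob", "Finance", "gemini.google.com"),
        LogRecord("carol", "Legal", "intranet.example.com"),
    ]
    print(top_ai_users(logs))  # {'Finance': [('alice', 2), ('bob', 1)]}
```

Matching subdomains as well as exact hosts means traffic to `www.chatgpt.com` is counted, while a look-alike such as `notchatgpt.com` is not. Departments with no AI traffic simply do not appear in the report.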

Guardrails over gadgets: Once you can see the problem, the next step is control. While tools like enterprise browsers offer a layer of control, Varavooru emphasizes that what makes any of them effective is real-time policy enforcement. "The rule should be simple: if an employee tries to access an external site like chatgpt.com from within the enterprise network, the guardrail should block them. That kind of technical enforcement," he argues, "is the point."
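The rule Varavooru describes reduces to a single decision: is the request coming from inside the enterprise network, and is it headed for a blocked AI site? The Python sketch below models only that decision logic, under stated assumptions; in practice enforcement would live in a firewall, secure web gateway, or enterprise browser policy rather than in application code. The address ranges, the `BLOCKED_AI_DOMAINS` set, and the `evaluate` function are hypothetical.

```python
import ipaddress
from enum import Enum

# Hypothetical internal address ranges; substitute the organization's own.
ENTERPRISE_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]

# Hypothetical blocklist of public generative-AI sites.
BLOCKED_AI_DOMAINS = {"chatgpt.com", "gemini.google.com"}


class Decision(Enum):
    ALLOW = "allow"
    BLOCK = "block"


def _matches(host: str, domains: set) -> bool:
    """True if host equals, or is a subdomain of, any listed domain."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def evaluate(source_ip: str, host: str) -> Decision:
    """Block traffic from inside the enterprise network to blocked AI hosts."""
    src = ipaddress.ip_address(source_ip)
    internal = any(src in net for net in ENTERPRISE_NETWORKS)
    if internal and _matches(host, BLOCKED_AI_DOMAINS):
        return Decision.BLOCK
    return Decision.ALLOW


if __name__ == "__main__":
    print(evaluate("10.1.2.3", "chatgpt.com"))           # Decision.BLOCK
    print(evaluate("10.1.2.3", "intranet.example.com"))  # Decision.ALLOW
```

Keeping the decision as a pure function of source and destination makes the policy easy to test and audit; a sanctioned internal AI tool could be handled by checking an allowlist before the blocklist.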

From static to strategic: Beyond blocking, Varavooru suggests implementing adaptive governance. In a world of ever-changing regulations and a complex global cybersecurity environment, he says, static "set it and forget it" policies are increasingly ineffective; a governance framework must instead embed automated compliance directly into its runtime guardrails. "Consider this scenario: an organization reviews an AI system against a regulation, puts it into production, and it runs for months. But then the regulations change. How do you ensure that live system isn't violating the new rules?"
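One way to read "embedding compliance into runtime guardrails" is that every request is checked against whatever rules are current at that moment, rather than the rules in force when the system was first reviewed. The Python sketch below illustrates that idea under stated assumptions: `PolicyRegistry`, `RuntimeGuardrail`, and both example rules are hypothetical, and requests are simplified to plain dictionaries.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# A rule inspects an outgoing AI request (simplified here to a dict) and
# returns a violation message, or None if the request complies.
Rule = Callable[[dict], Optional[str]]


@dataclass
class PolicyRegistry:
    """Hypothetical central store for the currently active rule set."""
    version: int = 0
    rules: Dict[str, Rule] = field(default_factory=dict)

    def publish(self, rules: Dict[str, Rule]) -> None:
        # Replace the active rule set; live guardrails see it on their next check.
        self.rules = dict(rules)
        self.version += 1


class RuntimeGuardrail:
    """Checks each request against the registry's rules at request time."""

    def __init__(self, registry: PolicyRegistry) -> None:
        self.registry = registry

    def check(self, request: dict) -> List[str]:
        violations = []
        for name, rule in self.registry.rules.items():
            message = rule(request)
            if message is not None:
                violations.append(f"{name}: {message}")
        return violations


if __name__ == "__main__":
    registry = PolicyRegistry()
    # Hypothetical rule in force when the system was first reviewed.
    registry.publish({
        "no_pii": lambda r: "personal data in prompt" if r.get("contains_pii") else None,
    })
    guard = RuntimeGuardrail(registry)
    request = {"contains_pii": False, "region": "us"}
    print(guard.check(request))  # []

    # Later the regulation changes: a hypothetical data-residency rule is added.
    registry.publish({
        **registry.rules,
        "eu_residency": lambda r: None if r.get("region") == "eu" else "data must stay in the EU",
    })
    print(guard.check(request))  # ['eu_residency: data must stay in the EU']
```

Because the guardrail holds a reference to the registry rather than a copy of the rules, publishing a new rule set changes the live system's behavior on its very next check, without redeploying the AI system itself.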

But technical controls alone aren't a silver bullet. They are most effective when paired with an equally robust cultural infrastructure. Varavooru advises that leaders provide safe reporting and experimentation channels to encourage employees to disclose AI usage early. "This creates visibility into what tools are being used and promotes innovation within a governed framework. It's an opportunity conversation, not a restrictive or disciplinary one."

Ultimately, the most effective path forward involves reframing the entire conversation, transforming AI governance from a bureaucratic hurdle into a strategic enabler of safe, responsible innovation. The idea is echoed by other experts who advise creating sandboxes for experimentation. "The leader should frame shadow AI as a shared enterprise risk and opportunity conversation. It's not a disciplinary or restrictive initiative. It's a communication that emphasizes that governance exists to enable safe AI adoption, not to slow innovation. Governance is an enabler, not a blocker," Varavooru says.