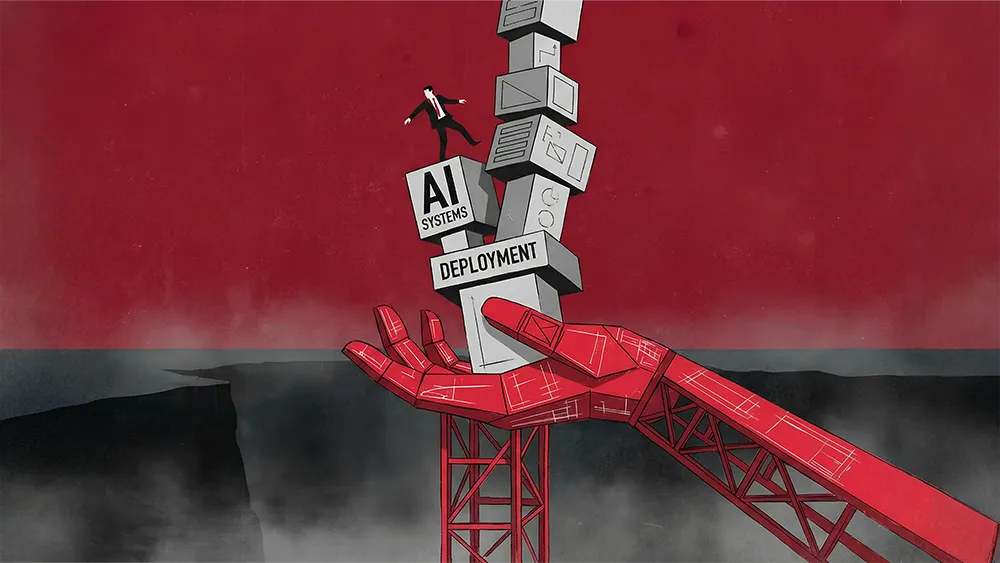

Clear Accountability Structures Reduce Risk, Anchor AI Deployment In Real Decision Workflows

Artur Walisko, Founder and Architect of LLM Studio, argues that the AI deployment gap is an architectural failure, not an adoption problem, and that governance must be built into AI systems as a structural layer before models reach real decisions.

Most organizations treat AI deployment as a finish line. Models ship, users are trained, and the project moves to maintenance. But the actual risk begins at that exact moment, when AI systems start shaping real decisions inside workflows where no one has explicitly defined who owns the evolving outputs, how context is preserved or constrained, and what happens when downstream judgment gets shaped without anyone noticing.

Artur Walisko is Founder and Architect of LLM Studio, an AI governance and decision analysis practice focused on designing accountability structures for automated and language model-based systems. His work sits at the intersection of technical architecture and organizational decision-making, with a focus on identifying where AI begins to influence human judgment without adequate oversight.

"The AI deployment gap doesn't start with users or adoption. It starts the moment systems enter real decision environments without clear ownership or accountability," says Walisko. That gap, he notes, is consistently misdiagnosed. Because model capability is visible while architectural responsibility is not, organizations default to treating the problem as one of training or user experience. The real issue is structural.

Capability masks the gap: "The issue is not that people underuse AI," Walisko explains. "It's that systems are deployed without structures that support sustained, accountable use." No one explicitly owns how decisions evolve over time, how context and memory are preserved or constrained, or who is accountable when outputs shape downstream judgment. Those are architectural questions, not onboarding questions.

Governance as architecture: Walisko defines governance not as policy or regulation, but as a systems layer. "Governance defines where authority, escalation, and stop-conditions live inside a system." When that layer is absent, standardization accelerates risk rather than reducing it. Systems scale faster than responsibility. "What breaks first is not performance, but accountability. Decisions become diffused, ownership becomes unclear, and human operators are left managing outcomes they do not fully control."

The result, he says, is counterintuitive. Rather than removing humans from the loop, poorly governed AI traps them inside it with blurred responsibility and increased cognitive load. That dynamic becomes particularly dangerous when AI outputs subtly shape judgment rather than replacing it outright.

Stabilize, don't steer: Walisko draws a clear line between AI that supports cognition and AI that quietly directs it. "The most dangerous AI systems are not those that replace human decision-making, but those that subtly shape judgment without clear boundaries." Protecting human agency, he says, requires deliberate design. "AI should stabilize cognition, not steer behavior. Outputs must be clearly framed as support, not conclusions. Escalation paths must exist when confidence or context thresholds are crossed." In his own work, AI functions best as an organizing layer that maintains continuity of thought while leaving authority explicitly with the human.
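One way to picture the escalation rule Walisko describes is a small routing function that labels every output as support and flags it for human review when a threshold is crossed. This is a minimal sketch, not his implementation: the names `Suggestion`, `Policy`, and `route`, the threshold values, and the use of a token count as a stand-in for "context" are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Suggestion:
    """A model output plus the signals used to decide on escalation (hypothetical)."""
    text: str
    confidence: float    # assumed model-reported score in [0, 1]
    context_tokens: int  # assumed proxy for how much context the output drew on


@dataclass(frozen=True)
class Policy:
    """Illustrative thresholds; real values would be set by the system owner."""
    min_confidence: float = 0.75
    max_context_tokens: int = 8000


def route(suggestion: Suggestion, policy: Policy) -> Dict[str, object]:
    """Frame the output as support and escalate when a threshold is crossed."""
    reasons: List[str] = []
    if suggestion.confidence < policy.min_confidence:
        reasons.append("confidence below threshold")
    if suggestion.context_tokens > policy.max_context_tokens:
        reasons.append("context above threshold")
    return {
        "label": "AI suggestion (support, not a conclusion)",
        "text": suggestion.text,
        "escalate": bool(reasons),
        "reasons": reasons,
        "decided_by": None,  # stays empty until a human records the decision
    }
```

The function never records a decision itself. It only frames the output and reports why review is needed, which keeps final authority with the operator.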

Making influence reversible: Agency is not protected by eliminating AI from the decision process, but by making its influence visible, bounded, and reversible. Walisko says this requires designing systems where the operator can always see where the AI contributed, override its framing, and trace the path from input to output. Without that transparency, what looks like human judgment may already be shaped by the system producing it.
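The three properties named here (visible, bounded, reversible) can be illustrated with a decision record that logs each contribution, lets an operator override an AI step without erasing it, and prints the path from input to output. This is a hedged sketch under assumed names (`Contribution`, `DecisionRecord`, `override`, `trace`); none of it comes from Walisko's own systems.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Contribution:
    """One step in a decision, attributed to its source (hypothetical structure)."""
    source: str  # "ai" or "human"
    input_text: str
    output_text: str
    overridden_by: Optional[str] = None


@dataclass
class DecisionRecord:
    steps: List[Contribution] = field(default_factory=list)

    def add(self, source: str, input_text: str, output_text: str) -> int:
        """Append a step and return its index."""
        self.steps.append(Contribution(source, input_text, output_text))
        return len(self.steps) - 1

    def override(self, index: int, operator: str, new_output: str) -> int:
        """Mark a step as overridden and record the human replacement.

        The original step stays in the record, so the override is itself traceable.
        """
        original = self.steps[index]
        original.overridden_by = operator
        return self.add("human", original.input_text, new_output)

    def ai_contributions(self) -> List[Contribution]:
        """Make visible every place the AI contributed."""
        return [s for s in self.steps if s.source == "ai"]

    def trace(self) -> List[str]:
        """Return the path from input to output, one line per step."""
        lines = []
        for i, s in enumerate(self.steps):
            note = f" (overridden by {s.overridden_by})" if s.overridden_by else ""
            lines.append(f"{i}: [{s.source}] {s.input_text!r} -> {s.output_text!r}{note}")
        return lines
```

Keeping the overridden step in the log, rather than replacing it, is the design choice that matters: it lets a reviewer see how the AI first framed the question as well as what the human finally decided.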

Organizations that deploy AI successfully over time share a specific set of architectural principles that distinguish them from those that stumble after launch:

Responsibility is explicit, not assumed: Ownership of decisions does not end at deployment. Someone remains accountable for how the system behaves as conditions change, data drifts, and users adapt their workflows around AI outputs.

Memory is designed, not emergent: What the system remembers and what it must forget are both intentional choices. Walisko warns that unmanaged memory creates compounding risk, where today's outputs become tomorrow's context in ways no one anticipated or approved. That principle carries particular weight as regulatory frameworks begin to formalize expectations around model transparency and data handling.

Authority is layered: AI can suggest and organize, but escalation and final judgment remain human. This principle prevents the gradual drift where operators begin to defer to system outputs not because they agree, but because overriding them requires effort that the workflow no longer supports.
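Of the three principles above, designed memory is the easiest to make concrete. The sketch below assumes a store that only accepts item kinds someone has explicitly approved and drops items once their retention window passes. The class name `DesignedMemory`, the `kind` labels, and the retention values are hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class MemoryItem:
    kind: str          # category of remembered content, e.g. "case_note"
    content: str
    created_at: float  # timestamp in seconds


class DesignedMemory:
    """Memory with an explicit allowlist and per-kind retention (illustrative)."""

    def __init__(self, retention_seconds: Dict[str, float]) -> None:
        # Only kinds listed here may be stored; everything else is refused.
        self.retention = dict(retention_seconds)
        self.items: List[MemoryItem] = []

    def remember(self, item: MemoryItem) -> bool:
        """Store the item only if its kind has an approved retention window."""
        if item.kind not in self.retention:
            return False
        self.items.append(item)
        return True

    def recall(self, now: float) -> List[MemoryItem]:
        """Forget expired items, then return what may still be used as context."""
        self.items = [
            i for i in self.items
            if now - i.created_at <= self.retention[i.kind]
        ]
        return list(self.items)
```

Because unapproved kinds are refused at write time, a model's own earlier outputs cannot become later context unless someone has decided they should.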

Most importantly, Walisko says, these organizations treat AI as infrastructure rather than as a tool. "They design for continuity, degradation, and failure, not just for performance at launch." That distinction determines whether AI scales responsibly or quietly accumulates governance debt that compounds with every decision the system touches.